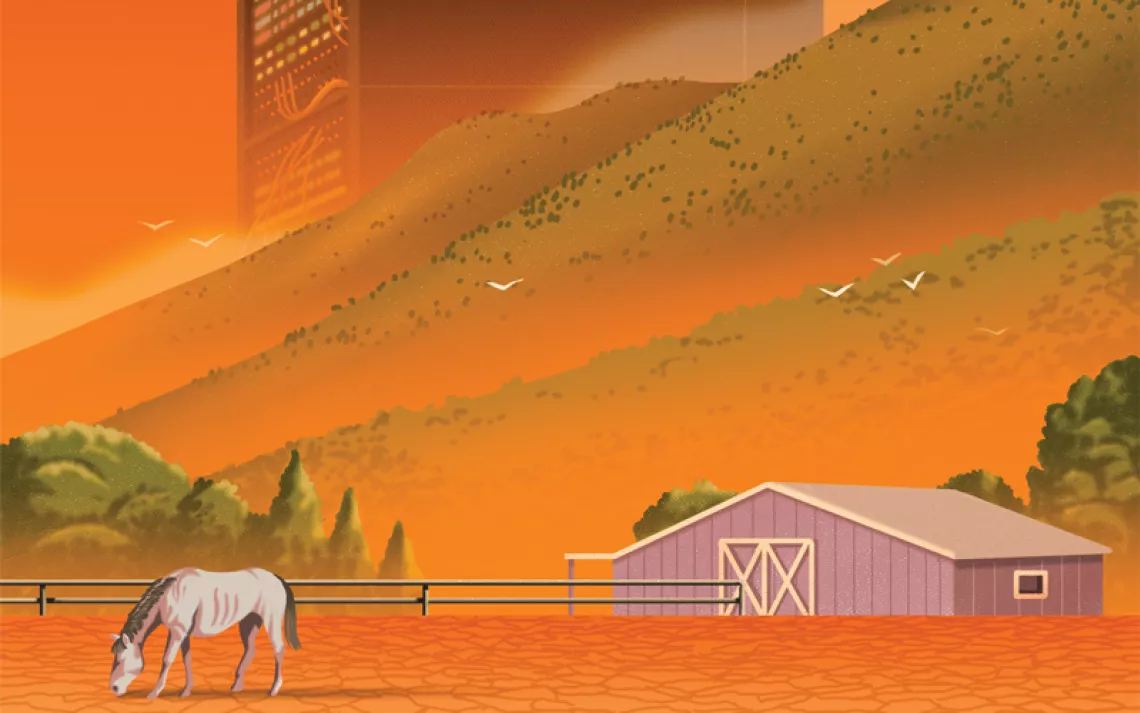

The Carbon Footprint of Amazon, Google, and Facebook Is Growing

How cloud computing, and especially AI, threatens to make climate change worse

IN MARCH The Information reported that Microsoft was in talks with OpenAI, the creator of ChatGPT, about spending an eye-popping $100 billion on a gargantuan data center in Wisconsin dedicated to running artificial intelligence software. Code-named “Stargate,” the data center would, at full operation, consume five gigawatts of electricity, enough to power 3.7 million homes. For comparison purposes, that’s roughly the same amount of power produced by Plant Vogtle, the big nuclear power station in Georgia that cost $30 billion to build.
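As a rough sanity check on the homes comparison, the two figures quoted above imply an average power draw per home. The sketch below is illustrative only: it assumes the facility runs continuously at five gigawatts and that a year has 8,760 hours, and the function and variable names are hypothetical.

```python
HOURS_PER_YEAR = 8760  # assumption: non-leap year, continuous operation


def implied_household_use(power_gw, homes):
    """Return (average kW per home, kWh per home per year) implied by the comparison."""
    avg_kw = power_gw * 1e6 / homes        # 1 GW = 1,000,000 kW
    annual_kwh = avg_kw * HOURS_PER_YEAR   # energy if that draw is sustained all year
    return avg_kw, annual_kwh


avg_kw, annual_kwh = implied_household_use(5.0, 3.7e6)
print(f"{avg_kw:.2f} kW per home, about {annual_kwh:,.0f} kWh per home per year")
```

Under these assumptions the comparison works out to roughly 1.35 kilowatts per home, or a little under 12,000 kilowatt-hours per home per year.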

Stargate is in the earliest of planning stages, but the sheer scale of the proposal reflects a truth about artificial intelligence: AI is an energy hog. That’s an embarrassing about-face for the technology industry. For at least 20 years, American electricity consumption has hardly grown at all—owing in part, say computer scientists, to steady advances in energy efficiency that have percolated out of the tech industry into the larger economy. In 2023, according to the US Energy Information Administration, total electricity consumption fell slightly from 2022 levels.

But according to a report published last December by Grid Strategies, a consultancy that advises on energy policy, multiple electric utilities now predict that US energy demand will rise by up to 5 percent over the next five years. Among the chief culprits responsible for the surge, say the utilities, are new data centers designed to run AI. To meet the growing demand for power, those utilities want to build new fossil fuel power plants and to dismantle climate legislation that stands in their way.

For environmentalists, this represents a giant step backward. Artificial intelligence was supposed to help us solve problems. What good are ChatGPT and its ilk if using them worsens global warming?

This is a relatively new story—the AI gold rush is still in its infancy, ChatGPT only having debuted in fall 2022. But computing’s energy demands have been growing for decades, ever since the internet became an indispensable part of daily life. Every Zoom call, Netflix binge, Google search, YouTube video, and TikTok dance is processed in a windowless, warehouse-like building filled with thousands of pieces of computer hardware. These data centers are where the internet happens, the physical manifestation of the so-called cloud—perhaps as far away from ethereality as you can get.

In the popular mind, the cloud is often thought of in the simple sense of storage. This is where we back up our photos, our videos, our Google Docs. But that’s just a small slice of it: For the past 20 years, computation itself has increasingly been outsourced to data centers. Corporations, governments, research institutions, and others have discovered that it is cheaper and more efficient to rent computing services from Big Tech.

The crucial point, writes anthropologist Steven Gonzalez Monserrate in his case study The Cloud Is Material: On the Environmental Impacts of Computation and Data Storage, is that “heat is the waste product of computation.” Data centers consume so much energy because computer chips produce large amounts of heat. Roughly 40 percent of a data center’s electricity bill is the result of just keeping things cool. And the new generation of AI software is far more processor intensive and power hungry than just about anything—with the notable exception of cryptocurrency—that has come before.

The energy cost of AI and its perverse, climate-unfriendly incentives for electric utilities are a gut check for a tech industry that likes to think of itself as changing the world for the better. Michelle Solomon, an analyst at the nonprofit think tank Energy Innovation, calls the AI power crunch “a litmus test” for a society threatened by climate change.

“Are we going to be able to grow the right way?” Solomon asks. “Or are we just going to keep coal plants online and build new gas plants?”

JUST THREE COMPANIES—Microsoft, Amazon, and Alphabet, Google’s parent corporation—are responsible for hosting two-thirds of the cloud. They are often referred to collectively as “hyperscalers,” a nod to the sheer size and sophistication of the data centers they are aggressively deploying. Stargate aside, current state-of-the-art data centers are major pieces of industrial infrastructure—campuses spread over hundreds of acres, consuming upwards of 100 megawatts. In addition to generating huge power bills, advanced data centers employ liquid cooling systems that can suck up millions of gallons of water daily.

None of the three companies would talk on the record for this story. But a review of the “sustainability” websites maintained by Microsoft, Amazon, and Alphabet reveals the paradox at the heart of AI energy consumption: Its negative consequences are not being acknowledged by corporations that proclaim themselves paragons of environmental responsibility.

Until recently, the hyperscaler triumvirate professed to be committed to a net-zero future, that magical point at which every ton of greenhouse gases for which they are responsible is offset by a ton not emitted elsewhere. (In July, Google abandoned that goal.) The companies all use the Greenhouse Gas Protocol process to track their emissions. And they all proudly report having spent billions of dollars supporting the build-out of renewable energy.

“The data center industry has been the biggest driver of renewables in the US,” says Dale Sartor, a now-retired Lawrence Berkeley National Laboratory scientist who worked for decades on energy efficiency in the sector. According to a report from S&P Global, the five big tech companies (Google, Amazon, Apple, Microsoft, and Meta, Facebook’s parent company) have been responsible “for over half of the global corporate renewables market”—about 45 gigawatts in total. Amazon is the market leader; as of 2022, it had contracted for more than 20 gigawatts of wind and solar. Google, which consistently gets higher grades from environmental organizations for living up to its clean energy commitments, reports that 64 percent of its electricity comes directly from renewable sources.

Historically, says Jonathan Koomey, a researcher who studies energy efficiency, the data center industry deserves credit for growing rapidly without causing undue strain on energy resources. A 2020 study he coauthored found that from 2010 to 2018, data storage increased 26-fold and the number of servers grew by 30 percent, but energy consumption ticked up by only 6 percent.

More broadly, Koomey cautions against taking utility forecasts of sharply rising demand at face value. Utilities make money by selling electricity, he notes. It is in their interest to forecast load growth that will incentivize regulators to authorize new power plants. “The utilities are very powerful, and they have a vested interest in pushing [the AI power surge] narrative,” he says.

Worldwide, data centers are believed to be responsible for 1 to 1.5 percent of global energy consumption. Koomey estimates that perhaps 10 percent of that total is attributable to AI. Meanwhile, according to the International Renewable Energy Agency, the heating and cooling of homes and businesses accounts for half of global energy consumption, and transportation for another 30 percent—all of which need to be electrified. Currently, only 8 percent of the grid is powered by renewable energy. A carbon-free future will require so much new renewable energy that maybe we should not get overly agitated about what data centers and AI are doing at the margins.

“If we are to accomplish our goals from a decarbonization and electrification standpoint,” Sartor says, “data center energy use is a drop in the bucket.”

Andrew Chien, a computer scientist at the University of Chicago who studies sustainability and cloud computing, disagrees. Data center defenders, he argues, are missing the true extent of the ongoing digital transformation of the global economy. In his view, the improvements Koomey points to are the result of a process in which super-efficient, highly sophisticated hyperscale data centers steadily replaced older, smaller, less efficient operations.

“The hyperscale data centers have been growing exponentially for probably 15 years,” Chien says. But the low-hanging fruit gained from pushing out the older data centers has now all been picked, while the overall pace of growth, turbocharged by AI, is continuing to accelerate. “I think we actually have a pretty severe problem.”

In 2023, Microsoft, Amazon, and Google reported that their combined spending on data centers surged in a single year, from $78 billion to $120 billion. Big Tech’s electricity appetite simultaneously skyrocketed. Google’s went from 10.5 terawatt-hours in 2018 to 22 terawatt-hours in 2022. Microsoft’s electricity consumption grew even more rapidly, from 11.2 terawatt-hours in 2020 to 18.6 in 2022. (Amazon does not release its energy consumption figures.)
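To put those electricity figures on a common footing, the start and end values can be converted into an average annual growth rate. This is a minimal sketch using only the numbers quoted above; it assumes smooth compound growth between the two endpoints, which the companies' actual year-by-year figures may not follow, and the function name is hypothetical.

```python
def annual_growth_rate(start, end, years):
    """Compound annual growth rate between two values, as a fraction."""
    return (end / start) ** (1 / years) - 1


# Terawatt-hours, as reported in the text above.
google = annual_growth_rate(10.5, 22.0, years=4)     # 2018 to 2022
microsoft = annual_growth_rate(11.2, 18.6, years=2)  # 2020 to 2022

print(f"Google:    {google:.1%} per year")
print(f"Microsoft: {microsoft:.1%} per year")
```

On that basis, Google's consumption grew at about 20 percent a year and Microsoft's at about 29 percent a year.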

Between 2021 and 2024, the number of data centers globally jumped from around 8,000 to 10,655, according to the Electric Power Research Institute. In March, a spokesperson for Amazon told The Wall Street Journal that the tech industry is building a new data center somewhere in the world every three days.

The near-doubling of Big Tech’s electricity bill in such a short period of time is consistent with a research finding reported by OpenAI in 2018: that the cost of “training” ChatGPT’s underlying software was doubling every three to four months. Using AI, in other words, appears to be inordinately expensive. John Hennessy, the chairman of Alphabet, told Reuters in February 2023 that it was 10 times more expensive to ask Google AI a question than to use a traditional keyword search.

For anyone who has experienced the notorious inaccuracies and “hallucinations” while using ChatGPT or Google’s new AI Overview, the economics at play here are befuddling. What’s the business model for a product that costs more to produce but is less reliable than its predecessor? For industry vets like Koomey, who have seen multiple tech bubbles pop over the past few decades, it seems quite possible that the AI power problem will swiftly solve itself if AI fails to deliver a rate of economic return that makes continued exponential growth feasible.

TODAY, AI-PROPELLED data center growth is booming, nowhere more so than in Northern Virginia’s Data Center Alley, home to the world’s largest cluster of centers. Energy demand there, Chien says, “is growing at 20 percent a year, and there is no sign that it is slowing down.” In 2019, data centers required less than 10 percent of the grid electricity that serves Data Center Alley. Just four years later, according to local utility Dominion Energy, the share had jumped to 20 percent, and new customer orders indicate that data center capacity is set to double by 2028.
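The 20 percent growth rate Chien cites can be translated into a doubling time. The sketch below assumes the rate holds steady and compounds annually, which is a simplification; the function name is hypothetical.

```python
import math


def doubling_time_years(annual_rate):
    """Years for a quantity to double at a constant compound annual growth rate."""
    return math.log(2) / math.log(1 + annual_rate)


years = doubling_time_years(0.20)
print(f"At 20 percent a year, demand doubles in about {years:.1f} years")
```

At a steady 20 percent a year, demand would double in just under four years.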

In a filing with regulators, Dominion asked for permission to build additional gas-fired power plants to meet the new demand. The utility has also explicitly supported a proposal by Glenn Youngkin, Virginia’s Republican governor, to withdraw from the Regional Greenhouse Gas Initiative, an agreement with neighboring states to reduce emissions.

Similar appeals for the extended use of fossil fuels are being made in other regions where data centers are clustered. In April, a paper released by the EFI Foundation, a nonprofit that purports to support “the transition to a low-carbon energy future,” noted that new gas-fired power plants would be necessary to meet “unprecedented” energy demand driven in part by AI. (The study was funded by the Southeast utility Duke Energy.) In Ohio, shortly after predicting a jump in electricity demand attributable to data centers, the CEO of Midwest utility AEP announced that the utility would sue the EPA to stop new regulations tightening environmental restrictions on coal-fired power plants. The backlash against environmental regulation supports Koomey’s thesis about electric utility self-interest: The utilities appear to be taking advantage of data center demand to pursue their own long-standing policy goals.

The technology companies that tout their renewable energy records have been notably silent with respect to the political consequences of data center growth. Amazon didn’t respond to questions about its position on Governor Youngkin weakening efforts to reach net zero. This wasn’t a huge surprise: In April 2023, Amazon lobbied against, and helped kill, a bill in Oregon that would have required data centers to source all their power from renewables.

Despite the obvious negative environmental externalities that their data center building boom is causing, Big Tech is forging ahead. “If AI raises their revenue high enough,” says computer scientist Roy Schwartz, who researches how to make AI less energy intensive, “they will gladly pay the energy bill.”

So why, faced with the unprecedented threat of climate chaos, are we allowing private sector corporations to dismantle our climate goals? “The only promises or assurances that we have that they will expand and grow sustainably are pledges that the tech companies make themselves,” Gonzalez Monserrate says. “There’s no one to intercede, no mechanism whatsoever to protect communities or the public interest except corporate goodwill.”

It’s not as if we lack evidence that regulation works. California passed its first legislation requiring power utilities to invest in renewable energy in 2002. The verdict is unambiguous: It has been a massive success. In spring 2024, California set a record of 45 straight days during which the supply of renewable energy sometimes exceeded electricity demand. Replicating California’s strategy at the national level would likely be the single most effective way to solve the AI power problem.

The incredible rise in data center energy consumption, however, is happening in the absence of any meaningful regulatory discipline. In the most recent Virginia legislative session, 17 bills aimed at imposing tighter regulation of the industry were introduced; every one of them was defeated or postponed. Similarly, Senator Ed Markey’s Artificial Intelligence Environmental Impacts Act of 2024, which would require the EPA to investigate how AI is contributing to climate change, has been stuck in committee since February. Meanwhile, some states are passing bills that will make matters even worse. Earlier this year in Maryland, in a move designed to make his state more attractive to the data center industry, Governor Wes Moore, a Democrat, pushed through legislation exempting backup diesel generators (which are used to keep data centers running during power outages) from environmental review.

Regulating an industry that has accrued as much power, wealth, influence, and capital as Big Tech is a challenge, Gonzalez Monserrate acknowledges. But he is heartened by how the current attention to AI’s electricity use is starting a conversation about the physical consequences of our migration into a data-center-mediated universe. “People are starting to finally understand,” he says, “that the cloud is material, and it has a cost.”

The Magazine of The Sierra Club
